The story of a guest

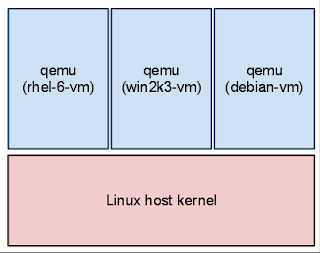

A guest is created by running the qemu program, also known as qemu-kvm or just kvm. On a host that is running 3 virtual machines there are 3 qemu processes:

When a guest shuts down, the qemu process exits. For convenience, a reboot can be performed without restarting the qemu process, although it would be equally valid to shut down and then start qemu again.

Guest RAM

Guest RAM is simply allocated when qemu starts up. It is possible to pass in file-backed memory with -mem-path so that hugetlbfs can be used. Either way, the RAM is mapped into the qemu process's address space and acts as the "physical" memory as seen by the guest.
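To make the idea concrete, here is a minimal sketch of how a userspace process could reserve a block of memory to act as guest RAM and translate a guest-physical address into a host pointer. The helper names (guest_ram_alloc, guest_ram_ptr, guest_ram_free) are made up for illustration and are not QEMU functions; the sketch assumes a Linux host and a guest whose RAM starts at guest-physical address 0.

```c
#define _DEFAULT_SOURCE /* for MAP_ANONYMOUS under strict -std= modes */
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

/* Hypothetical helper: reserve `size` bytes of zero-filled anonymous
 * memory to serve as guest RAM. Returns NULL on failure. */
static uint8_t *guest_ram_alloc(size_t size)
{
    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : (uint8_t *)p;
}

/* Hypothetical helper: translate a guest-physical address into a host
 * pointer, assuming guest RAM starts at guest-physical address 0.
 * Returns NULL when the address lies outside guest RAM. */
static uint8_t *guest_ram_ptr(uint8_t *ram, size_t ram_size, uint64_t gpa)
{
    return gpa < ram_size ? ram + gpa : NULL;
}

/* Hypothetical helper: release the mapping again. */
static void guest_ram_free(uint8_t *ram, size_t size)
{
    munmap(ram, size);
}
```

A file-backed variant would pass a file descriptor (for example one opened on a hugetlbfs mount) to mmap instead of using MAP_ANONYMOUS; the rest of the sketch would stay the same.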

QEMU supports both big-endian and little-endian target architectures so guest memory needs to be accessed with care from QEMU code. Endian conversion is performed by helper functions instead of accessing guest RAM directly. This makes it possible to run a target with a different endianness from the host.
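As an illustration of what such helpers do, the sketch below assembles 32-bit values byte by byte, so the result is the same regardless of the host's own byte order. The function names are invented for this example and are not QEMU's actual helper names.

```c
#include <stdint.h>

/* Hypothetical helpers: read a 32-bit value stored in guest memory in
 * little-endian or big-endian order, independent of host endianness. */
static uint32_t load_u32_le(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

static uint32_t load_u32_be(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Hypothetical helpers: write a 32-bit value into guest memory in the
 * byte order the guest expects. */
static void store_u32_le(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)v;
    p[1] = (uint8_t)(v >> 8);
    p[2] = (uint8_t)(v >> 16);
    p[3] = (uint8_t)(v >> 24);
}

static void store_u32_be(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}
```

Because the helpers never cast the byte pointer to a wider type, they also avoid unaligned access problems on hosts that are strict about alignment.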

KVM virtualization

KVM is a virtualization feature in the Linux kernel that lets a program like qemu safely execute guest code directly on the host CPU. This is only possible when the target architecture is supported by the host CPU. Today KVM is available on x86, ARMv8, ppc, s390, and MIPS CPUs.

To execute guest code using KVM, the qemu process opens /dev/kvm, creates a VM and its virtual CPUs, and issues the KVM_RUN ioctl on each virtual CPU. The KVM kernel module uses hardware virtualization extensions, such as those found on modern Intel and AMD CPUs, to execute guest code directly. When the guest accesses a hardware device register, halts the guest CPU, or performs other special operations, KVM exits back to qemu. At that point qemu can emulate the desired outcome of the operation, or simply wait for the next guest interrupt in the case of a halted guest CPU.
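For readers who want to see the setup calls in real C, here is a minimal sketch that opens /dev/kvm, checks the API version, and creates an empty VM with one virtual CPU. It stops short of loading guest code or calling KVM_RUN, which would also need guest memory and register setup. The function name kvm_probe is made up for this example, and the sketch assumes a Linux host with the kernel headers installed; it simply reports failure when KVM is not available.

```c
#include <fcntl.h>
#include <linux/kvm.h>
#include <sys/ioctl.h>
#include <unistd.h>

/* Hypothetical helper: returns 0 if a VM with one vCPU could be
 * created, -1 if KVM is unavailable or any step fails. */
static int kvm_probe(void)
{
    int ret = -1;
    int vm_fd = -1;
    int vcpu_fd = -1;

    int kvm_fd = open("/dev/kvm", O_RDWR);
    if (kvm_fd < 0) {
        return -1; /* no KVM support or no permission */
    }

    /* The stable KVM API reports KVM_API_VERSION (12). */
    if (ioctl(kvm_fd, KVM_GET_API_VERSION, (unsigned long)0) != KVM_API_VERSION) {
        goto out;
    }

    /* System ioctl on /dev/kvm: returns a new fd representing the VM. */
    vm_fd = ioctl(kvm_fd, KVM_CREATE_VM, (unsigned long)0);
    if (vm_fd < 0) {
        goto out;
    }

    /* VM ioctl: returns a new fd representing vCPU number 0.
     * KVM_RUN would later be issued on this fd. */
    vcpu_fd = ioctl(vm_fd, KVM_CREATE_VCPU, (unsigned long)0);
    if (vcpu_fd < 0) {
        goto out;
    }

    ret = 0;
out:
    if (vcpu_fd >= 0) {
        close(vcpu_fd);
    }
    if (vm_fd >= 0) {
        close(vm_fd);
    }
    close(kvm_fd);
    return ret;
}
```

Note the three levels of file descriptor: the /dev/kvm fd for system-wide queries, the VM fd, and one fd per virtual CPU.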

The basic flow of a guest CPU is as follows:

    open("/dev/kvm")
    ioctl(KVM_CREATE_VM)
    ioctl(KVM_CREATE_VCPU)
    for (;;) {
        ioctl(KVM_RUN)
        switch (exit_reason) {
        case KVM_EXIT_IO:  /* ... */
        case KVM_EXIT_HLT: /* ... */
        }
    }

The host's view of a running guest

The host kernel schedules qemu like a regular process. Multiple guests run side by side without knowledge of each other. Applications like Firefox or Apache also compete for the same host resources as qemu, although resource controls can be used to isolate and prioritize qemu.

Since qemu system emulation provides a full virtual machine inside the qemu userspace process, the details of what processes are running inside the guest are not directly visible from the host. One way of understanding this is that qemu provides a slab of guest RAM, the ability to execute guest code, and emulated hardware devices; therefore any operating system (or no operating system at all) can run inside the guest. There is no ability for the host to peek inside an arbitrary guest.

Guests have a so-called vcpu thread per virtual CPU. A dedicated iothread runs a select(2) event loop to process I/O such as network packets and disk I/O completion. For more details and possible alternate configuration, see the threading model post.
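To give a feel for what one turn of a select(2) event loop looks like, here is a small sketch that waits for a single file descriptor to become readable and then reads from it. The function names are invented for this example and this is not QEMU's actual main loop, which watches many file descriptors and timers at once.

```c
#include <stddef.h>
#include <sys/select.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: wait up to timeout_ms for fd to become readable.
 * Returns 1 if readable, 0 on timeout, -1 on error. */
static int wait_readable(int fd, int timeout_ms)
{
    fd_set rfds;
    struct timeval tv;

    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;
    FD_ZERO(&rfds);
    FD_SET(fd, &rfds);

    int n = select(fd + 1, &rfds, NULL, NULL, &tv);
    if (n < 0) {
        return -1;
    }
    return (n > 0 && FD_ISSET(fd, &rfds)) ? 1 : 0;
}

/* Hypothetical helper: one iteration of an event loop. Waits for fd,
 * then reads whatever is available into buf. Returns the number of
 * bytes read, 0 on timeout (or end of file), -1 on error. */
static ssize_t event_loop_once(int fd, void *buf, size_t len, int timeout_ms)
{
    int r = wait_readable(fd, timeout_ms);
    if (r <= 0) {
        return r;
    }
    return read(fd, buf, len);
}
```

A real event loop would call something like event_loop_once repeatedly and dispatch each ready file descriptor to the handler registered for it, for example a network backend or a disk I/O completion notifier.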

The following diagram illustrates the qemu process as seen from the host:

Further information

Hopefully this gives you an overview of QEMU and KVM architecture. Feel free to leave questions in the comments and check out other QEMU Internals posts for details on these aspects of QEMU.

Here are two presentations on KVM architecture that cover similar areas if you are interested in reading more:

- Jan Kiszka's Linux Kongress 2010 presentation on the Architecture of the Kernel-based Virtual Machine (KVM). Very good material.

- My own attempt at presenting a KVM Architecture Overview from 2010.